Main menu

You are here

Simple Language Benchmark

As some of you may know, the programming languages I use the most are C and Python. One reason for this is popularity - I want to learn something that will help me edit the programs I use. I also think it's good to know at least one compiled language and one interpreted language. Interpreted languages or "scripting languages" are more convenient in most respects but they take longer to run. I already knew Python would be slower than C but I wanted to see how much slower.

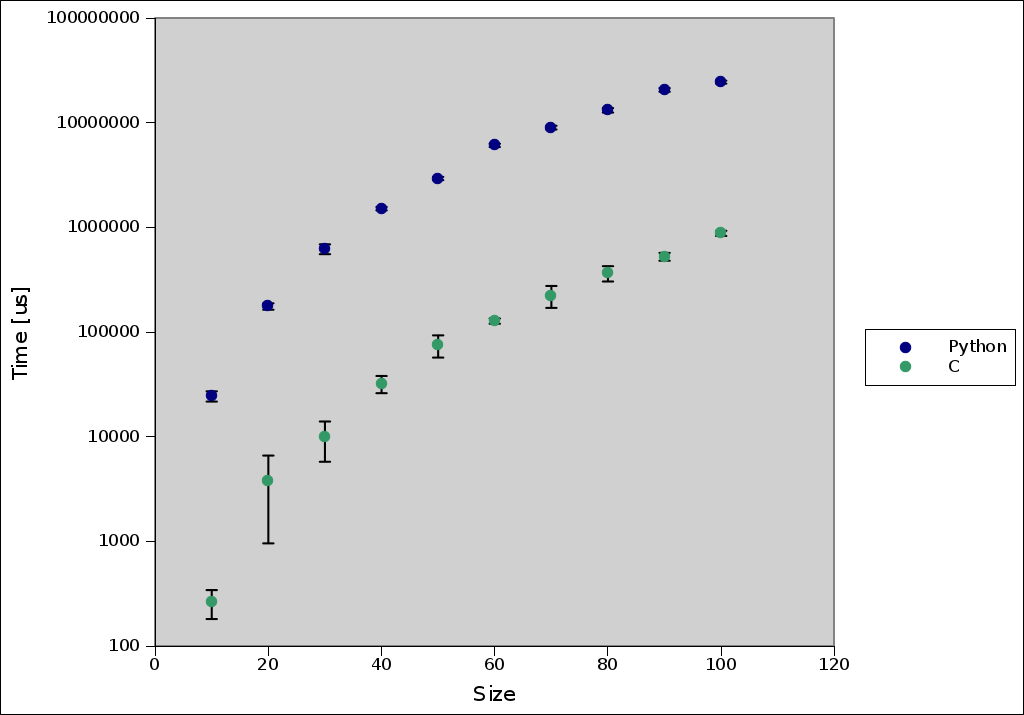

To make the above plot, I used C and Python codes to diagonalize an n by n matrix and kept track of their execution times. Once you get past the small matrices, the trend that begins to emerge is that Python is ~30 times slower than C.

Of course, there is more to say. I need to convince you that this was a fair test! Both programs were run on my ancient Pentium III laptop. My C program, matrixtimer.c was compiled with GCC and the optimization flags -march=pentium3 -Os -fomit-frame-pointer -pipe. My Python program, matrixtimer.py was byte compiled by opening a Python 2.7 shell and typing import matrixtimer. When testing each piece of code, I supplied the matrix size n, as an argument, and ran the diagonalization routine five times. Each data point in the plot shows the mean from those five trials with an error bar equal to the standard deviation.

Now there is the question of what eigenvalue algorithm I used. If you're big on numerics, look at the code here and see if you can guess! Otherwise I'll tell you in the next paragraph.

matrixtimer.c

-

#include <stdio.h> -

#include <stdlib.h> -

#include <math.h> -

#include <time.h> -

#include <sys/time.h> -

#define TOLERANCE 0.1 -

-

void find_max(double **matrix, int size, int *p, int *q) {

-

double max;

-

int i, j;

-

-

max = 0.0;

-

-

for (j = 0; j < size; j++) {

-

for (i = 0; i < j; i++) {

-

if (matrix[i][j] > max) {

-

max = matrix[i][j];

-

*p = i;

-

*q = j;

-

} -

} -

} -

} -

-

double norm(double **matrix, int size) {

-

double sum_of_squares;

-

int i;

-

-

sum_of_squares = 0.0;

-

-

for (i = 0; i < size; i++) {

-

sum_of_squares += matrix[i][i] * matrix[i][i];

-

} -

-

return sqrt(sum_of_squares / ((double) size));

-

} -

-

void get_difference(double **a, double **b, double **difference, int size) {

-

int i, j;

-

-

for (i = 0; i < size; i++) {

-

for (j = 0; j < size; j++) {

-

difference[i][j] = fabs(a[i][j] - b[i][j]);

-

} -

} -

} -

-

void transfer_diagonal_elements(double **dest, double **src, int size) {

-

int i;

-

-

for (i = 0; i < size; i++) {

-

dest[i][i] = src[i][i];

-

} -

} -

-

void diagonalize(double **matrix, double **eigenvector_matrix, int size) {

-

double **diagonal_matrix = malloc(size * sizeof(double *));

-

double **difference_matrix = malloc(size * sizeof(double *));

-

-

double sine, cosine, temp1, temp2;

-

int i, j, p, q, state;

-

-

for (i = 0; i < size; i++) {

-

diagonal_matrix[i] = calloc(size, sizeof(double));

-

difference_matrix[i] = calloc(size, sizeof(double));

-

} -

-

transfer_diagonal_elements(diagonal_matrix, matrix, size);

-

get_difference(matrix, diagonal_matrix, difference_matrix, size);

-

find_max(difference_matrix, size, &p, &q);

-

state = 1;

-

-

while (state) {

-

sine = matrix[p][q] / hypot(matrix[p][q], matrix[q][q] - matrix[p][p]);

-

cosine = (matrix[q][q] - matrix[p][p]) / hypot(matrix[p][q], matrix[q][q] - matrix[p][p]);

-

-

if (fabs(cosine) < 1e-5) {

-

cosine = 1.0 / sqrt(2.0);

-

sine = 1.0 / sqrt(2.0);

-

} -

-

for (j = 0; j < size; j++) {

-

temp1 = cosine * matrix[p][j] - sine * matrix[q][j];

-

temp2 = sine * matrix[p][j] + cosine * matrix[q][j];

-

matrix[p][j] = temp1;

-

matrix[q][j] = temp2;

-

} -

-

for (i = 0; i < size; i++) {

-

temp1 = cosine * matrix[i][p] - sine * matrix[i][q];

-

temp2 = sine * matrix[i][p] + cosine * matrix[i][q];

-

matrix[i][p] = temp1;

-

matrix[i][q] = temp2;

-

} -

-

for (i = 0; i < size; i++) {

-

temp1 = cosine * eigenvector_matrix[i][p] - sine * eigenvector_matrix[i][q];

-

temp2 = sine * eigenvector_matrix[i][p] + cosine * eigenvector_matrix[i][q];

-

eigenvector_matrix[i][p] = temp1;

-

eigenvector_matrix[i][q] = temp2;

-

} -

-

transfer_diagonal_elements(diagonal_matrix, matrix, size);

-

get_difference(matrix, diagonal_matrix, difference_matrix, size);

-

find_max(difference_matrix, size, &p, &q);

-

-

if (fabs(matrix[p][q]) < TOLERANCE * norm(diagonal_matrix, size)) {

-

state = 0;

-

} -

} -

} -

-

int main(int argc, char **argv) {

-

int i, j, size;

-

time_t t0; -

-

size = (int) strtod(argv[1], NULL);

-

-

double **matrix = malloc(size * sizeof(double *));

-

double **identity_matrix = malloc(size * sizeof(double *));

-

-

for (i = 0; i < size; i++) {

-

matrix[i] = calloc(size, sizeof(double));

-

identity_matrix[i] = calloc(size, sizeof(double));

-

} -

-

t0 = time(NULL);

-

srand(t0);

-

-

for (i = 0; i < size; i++) {

-

identity_matrix[i][i] = 1.0;

-

for (j = 0; j <= i; j++) {

-

matrix[i][j] = 9.0 * rand() / RAND_MAX;

-

matrix[j][i] = matrix[i][j];

-

} -

} -

-

struct timeval tv1;

-

struct timeval tv2;

-

int t1, t2;

-

-

gettimeofday(&tv1, NULL);

-

t1 = tv1.tv_usec + 1000000 * tv1.tv_sec;

-

diagonalize(matrix, identity_matrix, size);

-

gettimeofday(&tv2, NULL);

-

t2 = tv2.tv_usec + 1000000 * tv2.tv_sec;

-

-

printf("%d\n", t2 - t1);

-

}

matrixtimer.py

-

#!/usr/bin/python2 -

import sys

-

import math

-

import random

-

import timeit

-

-

def find_max(matrix):

-

max = 0

-

for j in range(0, len(matrix)):

-

for i in range(0, j):

-

if matrix[i][j] > max:

-

max = matrix[i][j]

-

p = i -

q = j -

return (p, q)

-

-

def norm(matrix):

-

sum = 0

-

for i in range(0, len(matrix)):

-

sum += matrix[i][i] ** 2

-

return math.sqrt(float(sum) / float(len(matrix)))

-

-

def diagonalize(matrix, eigenvector_matrix):

-

tol = 0.1

-

-

diagonal_matrix = []

-

difference_matrix = []

-

for i in range(0, len(matrix)):

-

current_diagonal_row = []

-

current_difference_row = []

-

for j in range(0, len(matrix)):

-

if i == j:

-

current_diagonal_row.append(matrix[i][j])

-

current_difference_row.append(0)

-

else: -

current_diagonal_row.append(0)

-

current_difference_row.append(matrix[i][j])

-

diagonal_matrix.append(current_diagonal_row)

-

difference_matrix.append(current_difference_row)

-

p, q = find_max(difference_matrix)

-

state = True

-

-

while state: -

sine = matrix[p][q] / math.hypot(matrix[p][q], matrix[q][q] - matrix[p][p])

-

cosine = (matrix[q][q] - matrix[p][p]) / math.hypot(matrix[p][q], matrix[q][q] - matrix[p][p])

-

-

if abs(cosine) < 1e-5:

-

sine = 1.0 / math.sqrt(2.0)

-

cosine = 1.0 / math.sqrt(2.0)

-

-

for j in range(0, len(matrix)):

-

temp1 = cosine * matrix[p][j] - sine * matrix[q][j]

-

temp2 = sine * matrix[p][j] + cosine * matrix[q][j]

-

matrix[p][j] = temp1

-

matrix[q][j] = temp2

-

-

for i in range(0, len(matrix)):

-

temp1 = cosine * matrix[i][p] - sine * matrix[i][q]

-

temp2 = sine * matrix[i][p] + cosine * matrix[i][q]

-

matrix[i][p] = temp1

-

matrix[i][q] = temp2

-

-

for i in range(0, len(matrix)):

-

temp1 = cosine * eigenvector_matrix[i][p] - sine * eigenvector_matrix[i][q]

-

temp2 = sine * eigenvector_matrix[i][p] + cosine * eigenvector_matrix[i][q]

-

eigenvector_matrix[i][p] = temp1

-

eigenvector_matrix[i][q] = temp2

-

-

for i in range(0, len(matrix)):

-

diagonal_matrix[i][i] = matrix[i][i]

-

-

for i in range(0, len(matrix)):

-

for j in range(0, len(matrix)):

-

difference_matrix[i][j] = abs(matrix[i][j] - diagonal_matrix[i][j])

-

-

p, q = find_max(difference_matrix)

-

-

if abs(matrix[p][q]) < tol * norm(diagonal_matrix):

-

state = False

-

-

matrix = []

-

identity_matrix = []

-

size = int(sys.argv[1])

-

for i in range(0, size):

-

current_row = []

-

current_identity_row = []

-

for j in range(0, size):

-

current_row.append(random.randrange(0, 9))

-

if i == j:

-

current_identity_row.append(1)

-

else: -

current_identity_row.append(0)

-

matrix.append(current_row)

-

identity_matrix.append(current_identity_row)

-

-

for i in range(0, size):

-

for j in range(i, size):

-

matrix[i][j] = matrix[j][i]

-

-

function_string = "diagonalize(" + str(matrix) + ", " + str(identity_matrix) + ")"

-

t = timeit.Timer(function_string, 'from __main__ import diagonalize')

-

time = 1000000 * t.timeit(number = 1)

-

print time

Both files generate a matrix that is symmetric but otherwise random. Then they implement Jacobi's eigenvalue algorithm from 1846 which has a special place in my heart. The symmetric eigenvalue problem came up (as it often does) in my project for a computational physics class in my third year of undergrad. The class was very introductory so no one's project was actually going to rival modern algorithms. Rather than link to an external library to diagonalize the matrix I needed, I put in that last little bit of effort to make the program self contained. Since it involves many basic numerical tasks, I thought it would be a good benchmark.

Jacobi's algorithm is indeed slower than the symmetric eigenvalue algorithms used in standard software packages like LAPACK. My undergraduate thesis supervisor even said that the LAPACK ones were far from optimal... probably because he knows Kazushige Goto (what a great name for a programmer). Nevertheless, people are still interested in the algorithm because it can easily be split into several parallel jobs. A quick look through the C and Python examples should reveal several operations that can be done concurrently.